We’ve compiled a list of the most used and useful APIs that are built into the standard Node.js runtime. For each module you’ll find simple English explanations and examples to help you understand.

This guide has been adapted from my course Node.js: Novice to Ninja. Check it out there to follow the complete course and build your own multi-user real-time chat application. It also includes quizzes, videos, and code to run your own Docker containers.

When building your first Node.js application it’s helpful to know what utilities and APIs Node provides out of the box to help with common use cases and development needs.

Useful Node.js APIs

- Process: Retrieve information on environment variables, args, CPU usage and reporting.

- OS: Retrieve OS and system-related information for the machine Node is running on: CPUs, operating system version, home directories, and so on.

- Util: A collection of useful and common methods that help with decoding text, type checking and comparing objects.

- URL: Easily create and parse URLs.

- File System API: Interact with the file system to create, read, update, and delete files, directories, and permissions.

- Events: For emitting and subscribing to events in Node.js. Works similarly to client-side event listeners.

- Streams: Used to process large amounts of data in smaller and more manageable chunks to avoid memory issues.

- Worker Threads: Used to separate the execution of functions on separate threads to avoid bottlenecks. Useful for CPU-intensive JavaScript operations.

- Child Processes: Allow you to run sub-processes that you can monitor and terminate as necessary.

- Clusters: Allow you to fork any number of identical processes across cores to handle the load more efficiently.

Process

The process object provides information about your Node.js application as well as control methods. Use it to get information such as environment variables and CPU and memory usage. process is available globally: you can use it without an import, although the Node.js documentation recommends you explicitly reference it:

import process from 'process';

- process.argv: returns an array where the first two items are the Node.js executable path and the script name. The item at index 2 is the first argument passed.

- process.env: returns an object containing environment name/value pairs, such as process.env.NODE_ENV.

- process.cwd(): returns the current working directory.

- process.platform: returns a string identifying the operating system: 'aix', 'darwin' (macOS), 'freebsd', 'linux', 'openbsd', 'sunos', or 'win32' (Windows).

- process.uptime(): returns the number of seconds the Node.js process has been running.

- process.cpuUsage(): returns the user and system CPU time usage of the current process, such as { user: 12345, system: 9876 }. Pass the object back to the method to get a relative reading.

- process.memoryUsage(): returns an object describing memory usage in bytes.

- process.version: returns the Node.js version string, such as v18.0.0.

- process.report: an object whose methods generate a diagnostic report.

- process.exit(code): exits the current application. Use an exit code of 0 to indicate success or an appropriate error code where necessary.
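As a minimal sketch of a few of these properties in use, the snippet below reads a command-line argument and an environment variable with fallbacks, then measures the CPU time of a block of work by passing an earlier process.cpuUsage() reading back to the method. The variable names and fallback values are illustrative assumptions, and require() is used so the snippet runs as a standalone script.

```javascript
const process = require('node:process');

// first user-supplied argument, or a fallback when none is passed
const firstArg = process.argv[2] ?? '(none)';

// environment variable with an assumed default
const mode = process.env.NODE_ENV || 'development';

// take a baseline CPU reading, do some work, then get a relative reading
const cpuStart = process.cpuUsage();
let total = 0;
for (let i = 0; i < 1e6; i++) total += Math.sqrt(i);
const cpuUsed = process.cpuUsage(cpuStart); // microseconds used since cpuStart

console.log(`argument: ${firstArg}`);
console.log(`mode: ${mode}`);
console.log(`platform: ${process.platform}, cwd: ${process.cwd()}`);
console.log(`CPU time: user ${cpuUsed.user}, system ${cpuUsed.system} (microseconds)`);
console.log(`heap used: ${process.memoryUsage().heapUsed} bytes`);
```

Run it with and without an argument (for example, node script.js hello) to see the fallback in action.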

OS

The os API is similar to process (see the “Process” section above), but it can also return information about the operating system Node.js is running in. This provides information such as the OS version, CPUs and uptime.

- os.cpus(): returns an array of objects with information about each logical CPU core. The “Clusters” section below references os.cpus() to fork the process. On a 16-core CPU, you’d have 16 instances of your Node.js application running to improve performance.

- os.hostname(): the OS host name.

- os.version(): a string identifying the OS kernel version.

- os.homedir(): the full path of the user’s home directory.

- os.tmpdir(): the full path of the operating system’s default temporary file directory.

- os.uptime(): the number of seconds the OS has been running.
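The methods above could be combined into a small system summary, as in this sketch. The systemSummary() function and its property names are hypothetical; only the os calls themselves come from Node.js. It uses require() so it runs as a standalone script.

```javascript
const os = require('node:os');

// gather a few system facts into a plain object
function systemSummary() {
  return {
    host: os.hostname(),
    kernel: os.version(),
    cores: os.cpus().length,                        // number of logical CPU cores
    home: os.homedir(),
    temp: os.tmpdir(),
    uptimeHours: Math.round(os.uptime() / 36) / 100 // hours to two decimal places
  };
}

console.table(systemSummary());
```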

Util

The util module provides an assortment of useful JavaScript methods. One of the most useful is util.promisify(function), which takes an error-first callback style function and returns a promise-based function. The util module can also help with common patterns such as decoding text, type checking, and inspecting objects.
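To illustrate util.promisify(), here is a minimal sketch. The doubleLater() function is a hypothetical error-first callback function invented for the example; any function following the (error, result) callback convention could be wrapped the same way. It uses require() so it runs as a standalone script.

```javascript
const util = require('node:util');

// hypothetical error-first callback function: doubles a number after a short delay
function doubleLater(value, callback) {
  setTimeout(() => {
    if (typeof value !== 'number') callback(new TypeError('value must be a number'));
    else callback(null, value * 2);
  }, 10);
}

// promise-based version of the same function
const doubleAsync = util.promisify(doubleLater);

// resolves with the second callback argument
const result = doubleAsync(21).then(value => {
  console.log(value); // 42
  return value;
});

// rejects with the error passed as the first callback argument
const failure = doubleAsync('x').catch(err => err.message);
```

The promisified function can also be used with await inside an async function.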

import util from 'util';

util.types.isDate( new Date() ); // true
util.types.isMap( new Map() ); // true
util.types.isRegExp( /abc/ ); // true
util.types.isAsyncFunction( async () => {} ); // true

URL

URL is another global object that lets you safely create, parse, and modify web URLs. It’s really useful for quickly extracting protocols, ports, parameters and hashes from URLs without resorting to regex. For example:

const myURL = new URL('https://example.org:8000/path/?abc=123#target');
console.log( myURL );

{
  href: 'https://example.org:8000/path/?abc=123#target',
  origin: 'https://example.org:8000',
  protocol: 'https:',
  username: '',
  password: '',
  host: 'example.org:8000',
  hostname: 'example.org',
  port: '8000',
  pathname: '/path/',
  search: '?abc=123',
  searchParams: URLSearchParams { 'abc' => '123' },
  hash: '#target'
}

You can view and change any property. For example:

myURL.port = 8001;

console.log( myURL.href );

You can then use the URLSearchParams API to modify query string values. For example:

myURL.searchParams.delete('abc');

myURL.searchParams.append('xyz', 987);

console.log( myURL.search );

There are also methods for converting file system paths to URLs and back again.
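The path conversion methods are pathToFileURL() and fileURLToPath() in the url module. The sketch below round-trips a path through a file: URL; the data/my file.txt path is an arbitrary example and does not need to exist. It uses require() so it runs as a standalone script.

```javascript
const path = require('node:path');
const { pathToFileURL, fileURLToPath } = require('node:url');

// absolute path based on the current working directory
const filePath = path.resolve('data', 'my file.txt');

// path to URL: special characters such as spaces are percent-encoded
const fileURL = pathToFileURL(filePath);
console.log(fileURL.protocol); // file:
console.log(fileURL.href);

// URL back to a platform-specific path
const roundTrip = fileURLToPath(fileURL);
console.log(roundTrip === filePath); // true
```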

The dns module provides name resolution functions so you can look up the IP address, name server, TXT records, and other domain information.

File System API

The fs API can create, read, update, and delete files, directories, and permissions. Recent releases of the Node.js runtime provide promise-based functions in fs/promises, which make it easier to manage asynchronous file operations.

You’ll often use fs in conjunction with path to resolve file names on different operating systems.
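As a small sketch of fs/promises and path working together, the following writes a file to a temporary directory, reads it back, and cleans up. The roundTrip() function, the node-api- prefix, and the note.txt file name are assumptions made for the example. It uses require() so it runs as a standalone script.

```javascript
const os = require('node:os');
const path = require('node:path');
const { mkdtemp, writeFile, readFile, stat, rm } = require('node:fs/promises');

// write text to a temporary file, read it back, then remove it
async function roundTrip(text) {
  // create a uniquely named directory inside the OS temp directory
  const dir = await mkdtemp(path.join(os.tmpdir(), 'node-api-'));
  const file = path.join(dir, 'note.txt'); // uses the correct separator for the OS

  try {
    await writeFile(file, text, { encoding: 'utf8' });
    const info = await stat(file);
    const content = await readFile(file, { encoding: 'utf8' });
    return { name: path.basename(file), bytes: info.size, content };
  }
  finally {
    // remove the directory and everything in it
    await rm(dir, { recursive: true, force: true });
  }
}

const done = roundTrip('hello Node.js').then(result => {
  console.log(result);
  return result;
});
```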

The following example module returns information about a file system object using the stat and access methods:

// fetch information about a file or directory
import { constants as fsConstants } from 'fs';
import { access, stat } from 'fs/promises';

export async function getFileInfo(file) {

  const fileInfo = {};

  try {
    const info = await stat(file);
    fileInfo.isFile = info.isFile();
    fileInfo.isDir = info.isDirectory();
  }
  catch (e) {
    // stat() failed, so the object does not exist yet
    return { new: true };
  }

  try {
    await access(file, fsConstants.R_OK);
    fileInfo.canRead = true;
  }
  catch (e) {}

  try {
    await access(file, fsConstants.W_OK);
    fileInfo.canWrite = true;
  }
  catch (e) {}

  return fileInfo;

}

When passed a filename, the function returns an object with information about that file. For example:

{
  isFile: true,
  isDir: false,
  canRead: true,
  canWrite: true
}

The main filecompress.js script uses path.resolve() to resolve input and output filenames passed on the command line into absolute file paths, then fetches information using getFileInfo() above:

#!/usr/bin/env node
import path from 'path';
import { readFile, writeFile } from 'fs/promises';
import { getFileInfo } from './lib/fileinfo.js';

// resolve and check files
let
  input = path.resolve(process.argv[2] || ''),
  output = path.resolve(process.argv[3] || ''),
  [ inputInfo, outputInfo ] = await Promise.all([ getFileInfo(input), getFileInfo(output) ]),
  error = [];

The code validates the paths and terminates with error messages if necessary:

// use the input file name when the output is a directory
if (outputInfo.isDir && outputInfo.canWrite && inputInfo.isFile) {
  output = path.resolve(output, path.basename(input));
}

// check for errors
if (!inputInfo.isFile || !inputInfo.canRead) error.push(`cannot read input file ${ input }`);
if (input === output) error.push('input and output files cannot be the same');

if (error.length) {
  console.log('Usage: ./filecompress.js [input file] [output file|dir]');
  console.error('\n  ' + error.join('\n  '));
  process.exit(1);
}

The whole file is then read into a string named content using readFile():

// read file
console.log(`processing ${ input }`);
let content;

try {
  content = await readFile(input, { encoding: 'utf8' });
}
catch (e) {
  console.log(e);
  process.exit(1);
}

let lengthOrig = content.length;
console.log(`file size ${ lengthOrig }`);

JavaScript regular expressions then remove comments and whitespace:

// compress content
content = content
  .replace(/\n\s+/g, '\n')               // trim leading space from lines
  .replace(/\/\/.*?\n/g, '')             // remove inline // comments
  .replace(/\s+/g, ' ')                  // collapse whitespace
  .replace(/\/\*.*?\*\//g, '')           // remove /* comments */
  .replace(/<!--.*?-->/g, '')            // remove <!-- comments -->
  .replace(/\s*([<>(){}[\]])\s*/g, '$1') // remove space around brackets
  .trim();

let lengthNew = content.length;

The resulting string is output to a file using writeFile(), and a status message shows the saving:

let lengthNew = content material.size;

console.log(`outputting ${output}`);

console.log(`file dimension ${ lengthNew } - saved ${ Math.spherical((lengthOrig - lengthNew) / lengthOrig * 100) }%`);

attempt {

content material = await writeFile(output, content material);

}

catch (e) {

console.log(e);

course of.exit(1);

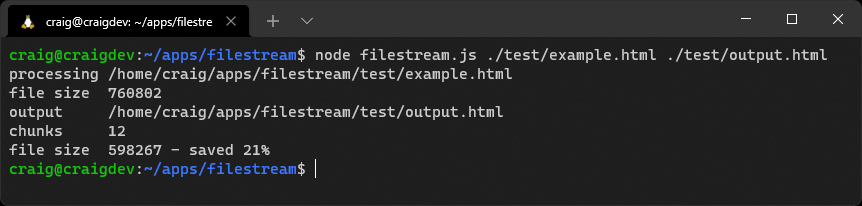

}Run the venture code with an instance HTML file:

node filecompress.js ./check/instance.html ./check/output.htmlOccasions

You often need to execute multiple functions when something occurs. For example, a user registers on your app, so the code must add their details to a database, start a new logged-in session, and send a welcome email. Without the Events module, the code might look like this:

// example pseudo code
async function userRegister(name, email, password) {
  try {
    await dbAddUser(name, email, password);
    await new UserSession(email);
    await emailRegister(name, email);
  }
  catch (e) {
    // handle error
  }
}

This series of function calls is tightly coupled to user registration. Further actions incur further function calls. For example:

// updated pseudo code
try {
  await dbAddUser(name, email, password);
  await new UserSession(email);
  await emailRegister(name, email);
  await crmRegister(name, email); // register on customer system
  await emailSales(name, email);  // alert sales team
}

You could have dozens of calls managed in this single, ever-growing code block.

The Node.js Events API provides an alternative way to structure the code using a publish–subscribe pattern. The userRegister() function can emit an event (perhaps named newuser) after the user’s database record is created.

Any number of event handler functions can subscribe and react to newuser events; there’s no need to change the userRegister() function. Each handler is independent of the others. Node.js calls them synchronously in the order they were registered, but no handler should rely on another having run first.
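The publish–subscribe approach could be sketched as follows. The userEvents emitter, the log array, and the handler bodies are stand-ins invented for the example, and the database call is omitted. It uses require() so it runs as a standalone script.

```javascript
const EventEmitter = require('node:events');

// hypothetical emitter shared by the registration code and its subscribers
const userEvents = new EventEmitter();
const log = [];

// subscribers: each reacts to "newuser" without userRegister() knowing about it
userEvents.on('newuser', user => log.push(`session started for ${user.email}`));
userEvents.on('newuser', user => log.push(`welcome email sent to ${user.email}`));
userEvents.on('newuser', user => log.push(`CRM record created for ${user.name}`));

// stand-in for the real function: the database step is left out
function userRegister(name, email) {
  // ...add the user to the database here...
  userEvents.emit('newuser', { name, email });
}

userRegister('Ada', 'ada@example.org');
console.log(log);
```

Adding a further action, such as alerting the sales team, only requires another on('newuser') subscriber; userRegister() stays unchanged.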

Events in Client-side JavaScript

Events and handler functions are frequently used in client-side JavaScript, for example to run a function when the user clicks an element:

// client-side JS click handler
document.getElementById('myelement').addEventListener('click', e => {
  // output information about the event
  console.dir(e);
});

In most situations, you’re attaching handlers for user or browser events, although you can raise your own custom events. Event handling in Node.js is conceptually similar, but the API is different.

Objects that emit events must be instances of the Node.js EventEmitter class. These have an emit() method to raise new events and an on() method for attaching handlers.

The event example project provides a class that triggers a tick event at predefined intervals. The ./lib/ticker.js module exports a default class that extends EventEmitter:

// emits a "tick" event every interval
import EventEmitter from 'events';
import { setInterval, clearInterval } from 'timers';

export default class extends EventEmitter {

Its constructor must call the parent constructor. It then passes the delay argument to a start() method:

  constructor(delay) {
    super();
    this.start(delay);
  }

The start() method checks delay is valid, resets the current timer if necessary, and sets the new delay property:

  start(delay) {

    if (!delay || delay == this.delay) return;

    if (this.interval) {
      clearInterval(this.interval);
    }

    this.delay = delay;

It then starts a new interval timer that runs the emit() method with the event name "tick". Subscribers to this event receive an object with the delay value and the number of milliseconds since the Node.js process started:

    // start timer
    this.interval = setInterval(() => {

      // raise event
      this.emit('tick', {
        delay: this.delay,
        time: performance.now()
      });

    }, this.delay);

  }

}

The main event.js entry script imports the module and sets a delay period of one second (1000 milliseconds):

// create a ticker
import Ticker from './lib/ticker.js';
const ticker = new Ticker(1000);

It attaches handler functions triggered every time a tick event occurs:

// add handlers
ticker.on('tick', e => {
  console.log('handler 1 tick!', e);
});

ticker.on('tick', e => {
  console.log('handler 2 tick!', e);
});

A third handler triggers on the first tick event only, using the once() method:

// add handler that runs once
ticker.once('tick', e => {
  console.log('handler 3 tick!', e);
});

Finally, the current number of listeners is output:

// show number of listeners
console.log(`listeners: ${ ticker.listenerCount('tick') }`);

Run the project code with node event.js.

The output shows handler 3 triggering once, while handlers 1 and 2 run on every tick until the app is terminated.

Streams

The file system example code above (in the “File System” section) reads a whole file into memory before outputting the minified result. What if the file was larger than the RAM available? The Node.js application would fail with an “out of memory” error.

The solution is streaming. This processes incoming data in smaller, more manageable chunks. A stream can be:

- readable: from a file, an HTTP request, a TCP socket, stdin, and so on.

- writable: to a file, an HTTP response, a TCP socket, stdout, and so on.

- duplex: a stream that’s both readable and writable

- transform: a duplex stream that transforms data

Each chunk of data is returned as a Buffer object, which represents a fixed-length sequence of bytes. You may need to convert this to a string or another appropriate type for processing.
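The difference between bytes and characters matters when converting chunks. This short sketch uses the global Buffer class with an arbitrary example string; the split position is chosen deliberately to land inside a two-byte character.

```javascript
// 'naïve café' written with escapes so the byte count is unambiguous
const text = 'na\u00efve caf\u00e9';
const whole = Buffer.from(text, 'utf8');

console.log(text.length);  // 10 characters
console.log(whole.length); // 12 bytes: ï and é take two bytes each in UTF-8

// a stream could split the data anywhere, even inside a multi-byte character
const first = whole.subarray(0, 3); // 'n', 'a', and the first byte of 'ï'
const second = whole.subarray(3);

// converting the first chunk alone produces a replacement character
console.log(first.toString() === 'na\u00ef'); // false

// joining the chunks before converting restores the original text
const joined = Buffer.concat([first, second]).toString('utf8');
console.log(joined === text); // true
```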

The example code has a filestream project which uses a transform stream to address the file size problem in the filecompress project. As before, it accepts and validates input and output filenames before declaring a Compress class, which extends Transform:

import { createReadStream, createWriteStream } from 'fs';
import { Transform } from 'stream';

// compression Transform
class Compress extends Transform {

  constructor(opts) {
    super(opts);
    this.chunks = 0;
    this.lengthOrig = 0;
    this.lengthNew = 0;
  }

  _transform(chunk, encoding, callback) {

    const
      data = chunk.toString(),                 // buffer to string
      content = data
        .replace(/\n\s+/g, '\n')               // trim leading space from lines
        .replace(/\/\/.*?\n/g, '')             // remove inline // comments
        .replace(/\s+/g, ' ')                  // collapse whitespace
        .replace(/\/\*.*?\*\//g, '')           // remove /* comments */
        .replace(/<!--.*?-->/g, '')            // remove <!-- comments -->
        .replace(/\s*([<>(){}[\]])\s*/g, '$1') // remove space around brackets
        .trim();

    this.chunks++;
    this.lengthOrig += data.length;
    this.lengthNew += content.length;

    this.push( content );
    callback();

  }

}

The _transform method is called when a new chunk of data is ready. It’s received as a Buffer object that’s converted to a string, minified, and output using the push() method. A callback() function is called once chunk processing is complete.

The application initiates file read and write streams and instantiates a new compress object:

// process streams
const
  readStream = createReadStream(input),
  writeStream = createWriteStream(output),
  compress = new Compress();

console.log(`processing ${ input }`);

The incoming file read stream has a .pipe() method, which feeds the incoming data through a series of functions that may (or may not) alter the contents. The data is piped through the compress transform before that output is piped to the writable file. A final on('finish') event handler function executes once the stream has ended:

readStream.pipe(compress).pipe(writeStream).on('finish', () => {
  console.log(`file size ${ compress.lengthOrig }`);
  console.log(`output ${ output }`);
  console.log(`chunks ${ compress.chunks }`);
  console.log(`file size ${ compress.lengthNew } - saved ${ Math.round((compress.lengthOrig - compress.lengthNew) / compress.lengthOrig * 100) }%`);
});

Run the project code with an example HTML file of any size:

node filestream.js ./test/example.html ./test/output.html

This is a small demonstration of Node.js streams. Stream handling is a complex topic, and you may not use them often. In some cases, a module such as Express uses streaming under the hood but abstracts the complexity from you.
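As an alternative to chaining .pipe() calls, the pipeline() function from stream/promises connects streams, returns a promise, and forwards errors from any stage. The sketch below is not the filestream project: it uses an in-memory source, a hypothetical upper-casing transform, and an array as the destination so it can run without files. It uses require() so it runs as a standalone script.

```javascript
const { Readable, Writable, Transform } = require('node:stream');
const { pipeline } = require('node:stream/promises');

// transform stream that upper-cases each chunk
const upperCase = new Transform({
  transform(chunk, encoding, callback) {
    callback(null, chunk.toString().toUpperCase());
  }
});

// writable stream that collects output in memory (a file stream in real code)
const output = [];
const collect = new Writable({
  write(chunk, encoding, callback) {
    output.push(chunk.toString());
    callback();
  }
});

// source stream created from an array of chunks
const source = Readable.from(['one ', 'two ', 'three']);

const finished = pipeline(source, upperCase, collect)
  .then(() => {
    const text = output.join('');
    console.log(text); // ONE TWO THREE
    return text;
  });
```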

You should also be aware of data chunking challenges. A chunk could be any size and split the incoming data in inconvenient ways. Consider minifying this code:

<script type="module">
  console.log('loaded');
</script>

Two chunks could arrive in sequence:

<script type="module">

And:

<script>
  console.log('loaded');
</script>

Processing each chunk independently results in the following invalid minified script:

<script type="module">script console.log('loaded');</script>

The solution is to pre-parse each chunk and split it into whole sections that can be processed. In some cases, chunks (or parts of chunks) will be added to the start of the next chunk.

Minification is best applied to whole lines, although an extra complication occurs because <!-- --> and /* */ comments can span more than one line. Here’s a possible algorithm for each incoming chunk:

- Append any data saved from the previous chunk to the start of the new chunk.

- Remove any whole <!-- to --> and /* to */ sections from the chunk.

- Split the remaining chunk into two parts, where part2 starts with the first <!-- or /* found. If either exists, remove further content from part2 except for that symbol. If neither is found, split at the last carriage return character. If none is found, set part1 to an empty string and part2 to the whole chunk. If part2 becomes significantly large (perhaps more than 100,000 characters because there are no carriage returns), append part2 to part1 and set part2 to an empty string. This will ensure saved parts can’t grow indefinitely.

- Minify and output part1.

- Save part2 (which is added to the start of the next chunk).

The process runs again for each incoming chunk.
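As a starting point, here is a deliberately simplified sketch that handles only the line-boundary part of the algorithm: it holds back an unfinished last line and prepends it to the next chunk. Comment handling, minification, and the size cap are left out. The WholeLines class name and the sample chunks are assumptions made for the example. It uses require() so it runs as a standalone script.

```javascript
const { Transform } = require('node:stream');

// passes on whole lines only; an unfinished last line waits for the next chunk
class WholeLines extends Transform {

  constructor(opts) {
    super(opts);
    this.saved = ''; // part2 carried over from the previous chunk
  }

  _transform(chunk, encoding, callback) {
    // note: a multi-byte character split across chunks would need string_decoder
    const data = this.saved + chunk.toString();
    const lastBreak = data.lastIndexOf('\n');

    // part1 ends at the last line break (empty if there is none)
    const part1 = data.slice(0, lastBreak + 1);
    this.saved = data.slice(lastBreak + 1);

    if (part1) this.push(part1);
    callback();
  }

  _flush(callback) {
    // output whatever remains when the input ends
    if (this.saved) this.push(this.saved);
    callback();
  }

}

// feed in chunks that split lines at awkward positions
const lines = new WholeLines();
const received = [];
lines.on('data', part => received.push(part.toString()));
const ended = new Promise(resolve => lines.on('end', () => resolve(received)));

lines.write('<script type="module">\nconsole');
lines.write(".log('loa");
lines.end("ded');\n</script>");

ended.then(parts => console.log(parts));
```

Every part except the final flush ends at a line break, so a minifier applied to each part would never see half a line.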

That’s your next coding challenge, if you’re willing to accept it!

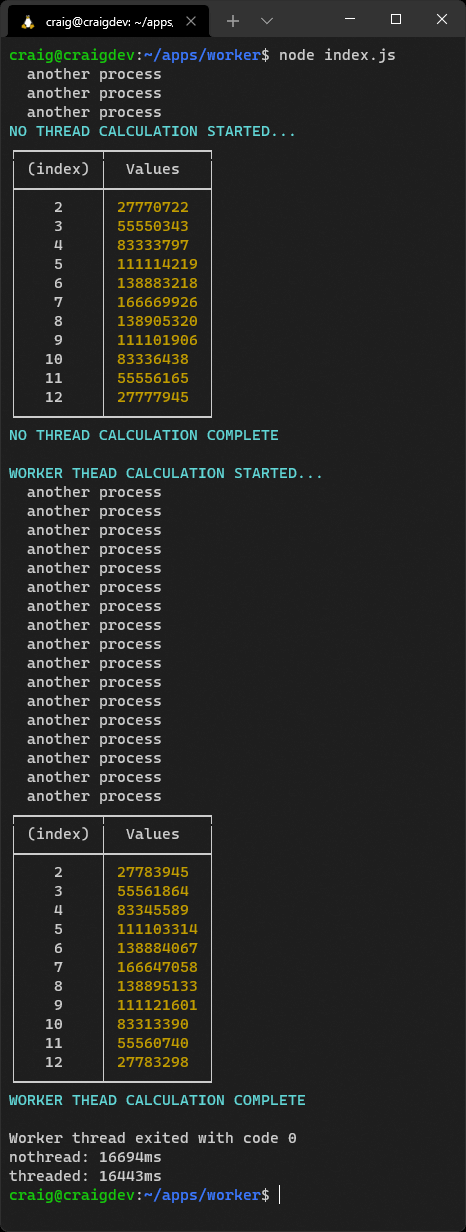

Worker Threads

From the docs: “Workers (threads) are useful for performing CPU-intensive JavaScript operations. They do not help much with I/O-intensive work. The Node.js built-in asynchronous I/O operations are more efficient than Workers can be”.

Assume a user could trigger a complex, ten-second JavaScript calculation in your Express application. The calculation would become a bottleneck that halted processing for all users. Your application can’t handle any requests or run other functions until it completes.

Asynchronous Calculations

Complex calculations that process data from a file or database may be less problematic, because each stage runs asynchronously as it waits for data to arrive. Processing occurs on separate iterations of the event loop.

However, long-running calculations written in JavaScript alone, such as image processing or machine-learning algorithms, will hog the current iteration of the event loop.

One solution is worker threads. These are similar to browser web workers and launch a JavaScript process on a separate thread. The main and worker threads can exchange messages to trigger or terminate processing.

Workers and Event Loops

Workers are useful for CPU-intensive JavaScript operations, although the main Node.js event loop should still be used for asynchronous I/O activities.

The example code has a worker project that exports a diceRun() function in lib/dice.js. This throws any number of N-sided dice a number of times and records a count of the total score (which should result in a Normal distribution curve):

// dice throwing
export function diceRun(runs = 1, dice = 2, sides = 6) {

  const stat = [];

  while (runs > 0) {

    let sum = 0;
    for (let d = dice; d > 0; d--) {
      sum += Math.floor( Math.random() * sides ) + 1;
    }

    stat[sum] = (stat[sum] || 0) + 1;
    runs--;

  }

  return stat;

}

The code in index.js starts a process that runs every second and outputs a message:

// run process every second
const timer = setInterval(() => {
  console.log('  another process');
}, 1000);

Two dice are then thrown one billion times using a standard call to the diceRun() function:

import { diceRun } from './lib/dice.js';

// throw 2 dice 1 billion times
const
  numberOfDice = 2,
  runs = 999_999_999;

const stat1 = diceRun(runs, numberOfDice);

This halts the timer, because the Node.js event loop can’t continue to the next iteration until the calculation completes.

The code then tries the same calculation in a new Worker. This loads a script named worker.js and passes the calculation parameters in the workerData property of an options object:

import { Worker } from 'worker_threads';

const worker = new Worker('./worker.js', { workerData: { runs, numberOfDice } });

Event handlers are attached to the worker object running the worker.js script so it can receive incoming results:

// result returned
worker.on('message', result => {
  console.table(result);
});

… and handle errors:

// worker error
worker.on('error', e => {
  console.log(e);
});

… and tidy up once processing has completed:

// worker complete
worker.on('exit', code => {
  // tidy up
});

The worker.js script starts the diceRun() calculation and posts a message to the parent when it’s complete, which is received by the "message" handler above:

// worker thread
import { workerData, parentPort } from 'worker_threads';
import { diceRun } from './lib/dice.js';

// start calculation
const stat = diceRun( workerData.runs, workerData.numberOfDice );

// post message to parent script
parentPort.postMessage( stat );

The timer isn’t paused while the worker runs, because it executes on another CPU thread. In other words, the Node.js event loop continues to iterate without long delays.

Run the project code with node index.js.

You should note that the worker-based calculation runs slightly faster because the thread is fully dedicated to that process. Consider using workers if you encounter performance bottlenecks in your application.
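The worker events can be wrapped in a promise so calling code can simply await the result. This is a single-file sketch rather than the worker project above: the worker source is passed inline using the eval option, and the summing loop is a stand-in for a heavier calculation. The runWorker() function name and the limit parameter are assumptions. It uses require() so it runs as a standalone script.

```javascript
const { Worker } = require('node:worker_threads');

// worker source kept inline so the sketch is one file;
// a real project would load a separate script such as worker.js
const workerSource = `
  const { workerData, parentPort } = require('node:worker_threads');
  let sum = 0;
  for (let i = 1; i <= workerData.limit; i++) sum += i;
  parentPort.postMessage(sum);
`;

// run the calculation in a worker and resolve with its message
function runWorker(limit) {
  return new Promise((resolve, reject) => {
    const worker = new Worker(workerSource, { eval: true, workerData: { limit } });
    worker.once('message', resolve);
    worker.once('error', reject);
    worker.once('exit', code => {
      if (code !== 0) reject(new Error(`worker stopped with exit code ${code}`));
    });
  });
}

// the main thread timer keeps running while the worker calculates
const timer = setInterval(() => console.log('main thread still responsive'), 5);

const total = runWorker(1_000_000).then(sum => {
  clearInterval(timer);
  console.log(`sum: ${sum}`); // sum: 500000500000
  return sum;
});
```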

Child Processes

It’s sometimes necessary to call applications that are either not written in Node.js or have a risk of failure.

A Real-world Example

I worked on an Express application that generated a fuzzy image hash used to identify similar graphics. It ran asynchronously and worked well, until someone uploaded a malformed GIF containing a circular reference (animation frameA referenced frameB which referenced frameA).

The hash calculation never ended. The user gave up and tried uploading again. And again. And again. The whole application eventually crashed with memory errors.

The problem was fixed by running the hashing algorithm in a child process. The Express application remained stable because it launched, monitored, and terminated the calculation when it took too long.

The child process API lets you run sub-processes that you can monitor and terminate as necessary. There are three options:

- spawn: spawns a child process.

- fork: a special type of spawn that launches a new Node.js process.

- exec: spawns a shell and runs a command. The result is buffered and returned to a callback function when the process ends.

Unlike worker threads, child processes are independent from the main Node.js script and can’t access the same memory.
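The launch, monitor, and terminate pattern described above could be sketched with spawn as follows. This is not the image-hashing code: the child simply runs a short Node.js script passed with the -e flag, and the runWithTimeout() function and its timeout values are assumptions made for the example. It uses require() so it runs as a standalone script.

```javascript
const { spawn } = require('node:child_process');

// run a Node.js script in a child process and stop it if it exceeds timeoutMs
function runWithTimeout(script, timeoutMs) {
  return new Promise((resolve, reject) => {

    // process.execPath is the path of the running Node.js executable
    const child = spawn(process.execPath, ['-e', script]);

    // monitor: collect anything the child writes to stdout
    let output = '';
    child.stdout.on('data', chunk => { output += chunk; });

    // terminate: kill() sends SIGTERM by default
    const timer = setTimeout(() => child.kill(), timeoutMs);

    child.on('error', reject);
    child.on('close', (code, signal) => {
      clearTimeout(timer);
      resolve({ code, signal, output: output.trim() });
    });

  });
}

// a well-behaved child and one that never finishes
const quick = runWithTimeout('console.log(6 * 7)', 5000);
const stuck = runWithTimeout('while (true) {}', 500);

quick.then(result => console.log('quick:', result));
stuck.then(result => console.log('stuck:', result));
```

The parent stays responsive in both cases: the endless loop only blocks the child, which is killed once the timeout passes.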

Clusters

Is your 64-core server CPU under-utilized when your Node.js application runs on a single core? Clusters allow you to fork any number of identical processes to handle the load more efficiently.

The initial primary process can fork itself, perhaps once for each CPU returned by os.cpus(). It can also handle restarts when a process fails, and broker communication messages between forked processes.

Clusters work amazingly well, but your code can become complex. Simpler and more robust options include:

Both can start, monitor, and restart multiple isolated instances of the same Node.js application. The application will remain active even when one fails.

Write Stateless Applications

It’s worth mentioning: make your application stateless to ensure it can scale and be more resilient. It should be possible to start any number of instances and share the processing load.

Summary

This article has provided a sample of the more useful Node.js APIs, but I encourage you to browse the documentation and discover them for yourself. The documentation is generally good and shows simple examples, but it can be terse in places.

As mentioned, this guide is based on my course Node.js: Novice to Ninja which is available on SitePoint Premium.